As you can see, rosserial got a little upset at my procedure... and I'm not sure why. All 3 states still work in action, but I get lots of mean text as opposed to the approval you get from other message types. A lot of threads seem to indicate this is due to the relative message size and buffer size for the particular board, but I seem to be well within the limits of my ATMEGA32 board. I'm investigating it currently, but as it works, I'll also be moving on to something a lot more exciting.

Showing posts with label Drone. Show all posts


Sunday, December 14, 2014

Drone Following #10: Added ROS capabilities to sketch

Tonight I added ROS functionality to the Arduino sketch. It's just a few lines of code, but they have great implications! The LED state machine is now a Publisher and sends its information via the serial port, so anything connected to ROS on my laptop can read what state the device is in. Logically, the next step is to start working with the Drone. Here are some screenshots from it in action:

Drone Following #9: Soldering, State Machine, Github.

I've been quite productive since my finals (finally) ended.

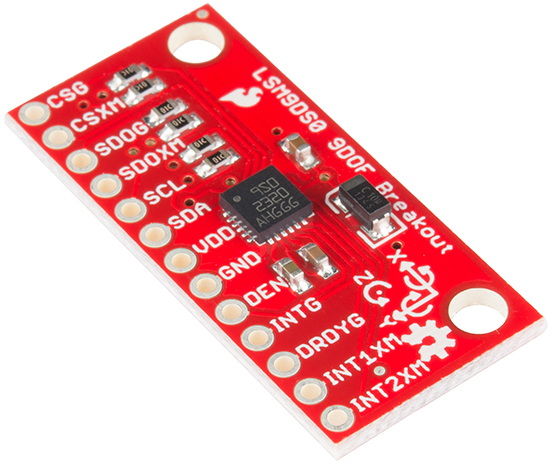

First of all, my chips arrived! I was quite impressed with how small they were! The LSM9DS0 is about the length of half your thumb, and the level converter only a third of that. Not long after they arrived, I snuck out to the school's tech lab and soldered them together. It was nice to get back in the saddle and sit down with the ol' soldering iron. It feels like knitting, but with heat. My work isn't what it used to be, but I think it's enough to get the job done.

Next, I enjoyed my break exactly as envisioned. Tonight I spent the night doing some programming, the beginning of the drone following project. This consisted of creating a 3-state finite state machine. Right now it doesn't do much other than turn on LEDs in sequential order and tell you, via the serial port, what state it's in.

But this is very important! After all, when I integrate this with the Drone controls, you don't want it ever going to the wrong state at the wrong time... This could mean taking off or landing at the wrong time. That being said, there was also some fool-proofing to be done-- for example, the Arduino reads super quick (sometimes), so you need to put a time delay in for the button press, or it may quickly transition between states. I handled this by having it hold for a second in a loop if the button is held. Right now all of the states are working as intended, so the next move will be ROS integration-- sending the state to ROS. From here, the Drone will read it, and respond accordingly. The states are as follows: Landing Mode, Takeoff Mode (indicated by a yellow LED) and Calibration Mode (indicated by a green LED). Calibration is where the Drone begins to follow movement.
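To make the button fool-proofing a bit more concrete, here is a minimal Python sketch of the idea (not the actual Arduino code, which lives on Github). The names `Mode`, `StateMachine` and `HOLD_SECONDS` are made up for illustration, the Landing -> Takeoff -> Calibration cycling order is an assumption, and instead of blocking in a loop for a second it uses a timestamp lockout, which is a slightly different technique with the same effect: one transition per second at most, no matter how fast the button is read.

```python
from enum import Enum


class Mode(Enum):
    LANDING = 0
    TAKEOFF = 1      # indicated by the yellow LED
    CALIBRATION = 2  # indicated by the green LED


HOLD_SECONDS = 1.0  # assumed lockout between transitions


class StateMachine:
    """Three-state machine that ignores repeated reads of a held button."""

    def __init__(self):
        self.mode = Mode.LANDING
        self._last_change = None  # time of the most recent transition

    def update(self, button_pressed, now):
        """Advance at most one state per HOLD_SECONDS while the button is down."""
        if not button_pressed:
            return self.mode
        locked = (self._last_change is not None
                  and now - self._last_change < HOLD_SECONDS)
        if locked:
            return self.mode
        # Assumed order: Landing -> Takeoff -> Calibration -> Landing.
        self.mode = Mode((self.mode.value + 1) % len(Mode))
        self._last_change = now
        return self.mode


if __name__ == "__main__":
    machine = StateMachine()
    # Simulate half a second of a held button, read 1000 times per second.
    for i in range(500):
        machine.update(True, i / 1000.0)
    print(machine.mode)  # only one transition happened
```

Passing the time in as an argument (rather than reading a clock inside `update`) is just a convenience so the behavior can be checked without waiting around.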

Finally, I've made myself available on Github. It's been a while since I used it consistently, but I've realized that if I want to show people what I'm made of, I have to... show people what I'm made of. Therefore, I'll try to be consistent in uploading any code changes for your own viewing at my own Github profile, Gariben. Here you can find the code for the state machine mentioned, as well as handy Fritzing diagrams and schematics. I'll usually include those in the blog, like so:

Currently, the code is housed under the "AirCat" repository. I didn't quite know what to name the project, since "Drone Following" will probably get me a pitchfork mob from an uneducated following, so I thought I'd just mimic the AirDog project that features the Drone remote, since the ideas are similar. Anyways, I hope to continue work on this project along with the others I've dedicated myself to, so I'll try to keep everyone updated. Be sure to stay tuned to my Github for the latest-- it always comes at least a little before the blog.

Tuesday, December 2, 2014

Drone Following #7: At last, a sensor

Good news, everyone! I finally managed to get my hands on an IMU. I know it's been a while, but also, I think the professor that was going to supply me with one gave up. Luckily, I managed to pick one up from Sparkfun's great Cyber Monday sale. Not only am I surprised that anyone had a Cyber Monday sale, but also, that they were selling decent goods at decent prices. I picked up a 9DOF IMU (LSM9DS0) normally valued at $30.00 for just $15.00.

I'm glad that it's on its way, because it means I can continue work on this exciting project. However, there will also be a lot of configuring and reading to do. This presents a good opportunity to learn more in-depth about IMUs, Gyroscopes, Accelerometers, and Magnetometers. I may do a write-up on Camera Eye about all I've learned, so I can share it with other people. If you'd like to investigate more about this chip yourself, Sparkfun has a guide here.

Anyways, as it stands, I'm currently wrestling with my last week of classes and finals, but I will be spending my winter break taking on projects like this one, and some other ones I have with friends, so stay posted!

P.S. Here's a video using the same chip for controlling a camera.

Labels:

Accelerometer,

DOF,

Drone,

Following,

Gyrometer,

Head tracking,

Lakitu,

ROS

Thursday, September 18, 2014

Drone Following #6: Buying the right sensor

I'm having a hard time choosing which sensor to buy for the Drone following project. It's as much a mathematical question as it is a computational one. Let me put it to you this way: an Accelerometer measures linear acceleration, and a Gyrometer measures angular velocity. If you understand the calculus relationships there, you can integrate to get "the next integral up" (velocity from acceleration, position from velocity, etc.), but it comes with a fair amount of error, since any sensor bias or noise accumulates with every integration.
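To put a rough number on that error, here is a small Python sketch. Everything in it is an assumption for illustration: a 100 Hz sample rate, a sensor that is standing perfectly still, and a constant accelerometer bias of 0.05 m/s^2. It integrates the readings once to get velocity and again to get position, using plain rectangular (Euler) integration.

```python
def integrate(samples, dt):
    """Cumulative rectangular (Euler) integration of evenly spaced samples."""
    total = 0.0
    running = []
    for sample in samples:
        total += sample * dt
        running.append(total)
    return running


dt = 0.01              # 100 Hz sample rate (assumed)
bias = 0.05            # constant accelerometer bias in m/s^2 (assumed)
accel = [bias] * 1000  # 10 s standing still: the true acceleration is zero

velocity = integrate(accel, dt)     # first integral: velocity
position = integrate(velocity, dt)  # second integral: position

print(f"velocity error after 10 s: {velocity[-1]:.2f} m/s")
print(f"position error after 10 s: {position[-1]:.2f} m")
```

With these made-up numbers the velocity is off by about half a metre per second after ten seconds, and the position by about two and a half metres. The velocity error grows linearly with time and the position error quadratically, which is why working one integral lower is so much less scary.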

What we need from this project is a way to detect a change in rotation from the person, as well as a change on the two dimensional plane. This could be done by mapping the person's theoretical position, but again, even with the velocity and acceleration information, it can get kind of scary. Thankfully, the angular velocity of the person rotating can easily be extracted with a gyrometer, but that leaves the more difficult question... what about movement?

An important thing to consider is also how you plan to implement the system. You could do it with a position-tracking system, or you could "take things down a notch" and think in terms of velocity. I chose the latter, after thinking back to my teleoperation node for the Pioneer. Everything you see the joystick doing is actually "telling" the robot to change its velocity in one direction (with some scaling). That being said, I think the same could be done for this project. ROS communicates messages quickly enough that, as long as things didn't get dicey, the Drone could follow the person's velocity, or at least I think so.
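As a rough illustration of the "velocity with some scaling" idea, here is a Python sketch that turns a measured forward speed and turn rate into a normalized command. The function name, the dictionary keys and both `MAX_` constants are hypothetical. The -1 to 1 range mirrors how I understand joystick-style velocity commands to be normalized; treat that as an assumption rather than a statement about any particular driver.

```python
MAX_SPEED = 5.0      # m/s that maps to a full forward command (assumed)
MAX_YAW_RATE = 90.0  # deg/s that maps to a full rotation command (assumed)


def clamp(value, low=-1.0, high=1.0):
    """Limit value to the closed range [low, high]."""
    return max(low, min(high, value))


def follow_command(forward_speed, yaw_rate):
    """Scale the wearer's measured velocities into normalized commands."""
    return {
        "linear_x": clamp(forward_speed / MAX_SPEED),
        "angular_z": clamp(yaw_rate / MAX_YAW_RATE),
    }


if __name__ == "__main__":
    # Wearer moving at 2.5 m/s while turning at 45 deg/s.
    print(follow_command(2.5, 45.0))
    # Readings beyond the assumed maximums saturate instead of overshooting.
    print(follow_command(10.0, -200.0))
```

The clamp is the important part: a noisy spike from the sensor gets capped at a full-scale command rather than being passed through.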

This kind of ambiguity doesn't leave me in a good place to buy some equipment. Thankfully, now that my project and my Electronics course's project have finally been merged, I can lean on the department to buy a nice sensor (that they will own for other student projects hereafter) at no risk or expense to me. Therefore we went with a complex 9 DOF stick from Sparkfun. After all, you can just ignore the aspects you don't need until you get to that point. And who knows, maybe if this goes well, this chip could be the kind of thing we'd want for the head tracking, and then maybe just a simple one-axis gyro on the back.

I imagine it'll be a little bit before it gets here, so I'll spend more quality time with my Drone. It'd be a blast to get it configured with ROS!


Labels:

Accelerometer,

Calculus,

DOF,

Drone,

Following,

Gyrometer,

Head tracking,

Lakitu,

ROS

Drone Following #5: Getting ROS onto the Arduino.

Sorry for no updates in a while. If you haven't heard, I founded a robotics club, and I have a Differential Equations exam this week.


However, in my electronics course, I had some success uploading ROS onto the Arduino, which is certainly a step forward. I expected it to be a lot more difficult than it actually was, but there were a few tricky elements. I find that the stage of just getting everything installed is FAR more frustrating than actually programming and compiling.

The most confusing thing, which I'll be sure to specify here, is that, in Ubuntu, the Arduino's 'libraries' and 'tools' directories are not in the sketchbook directory like every other OS I can think of. They are instead in /usr/share/arduino, so don't let that trip you up if you want to add anything to your IDE.

With that out of the way, it's time to set sail for rosserial. Here you'll find a great set of tutorials as well as a good video explanation of why you would want to use ROS on an Arduino project:

In short, it's one of those many "reinventing the wheel" moments that comes as part of being a programmer. Also, if ROS supports whatever else you're working with, just think of ROS as the dinner party both of these members will attend. Now, I have a means of making the Drone communicate with the Arduino, my "sensor stick".

After getting through the installation bores, you can check the first tutorial, a sample publisher. You should find that it's pretty easy. When you finish you should have a new topic, "chatter", that contains a "Hello World" message from the Arduino!

This may not be impressive in itself, but imagine if we modified this a little bit. Imagine a theoretical sensor running from the arduino and writing that data to ROS. You now have valuable, instant information at your fingertips. So now it's time to get the Drone talking and order a sensor. I'll make a separate post about that arduous process.

Monday, September 1, 2014

Drone Following #4: Possible Hardware, Possible Expansions

I seem to have a lot of ideas whenever I'm biking. Perhaps it's that it just puts me where I would be when the project finally comes together. That, and I really enjoy pretending I'm on a lightcycle.

I've been thinking about the device to use more and more. The device we choose will be important, because it needs to guide the drone. The drone will essentially have no native information about its orientation, and we want to supply that from the human being via a device. I believe I've settled on using an Arduino for the time being. They happen to be something I have in abundance and I have an upcoming project for one in an electronics class.

However, an Arduino alone won't do it. I was thinking about what kind of shield would be most appropriate for the situation. I thought a little bit about maybe a GPS shield, but for the time being I think that's a bit overkill. I was thinking actually of using a gyrometer shield. The idea of wearables excites me these days, so I was considering having the target wear the Arduino on his/her back, and having the drone figure its angle from the information on the Arduino. I was worried that this might be a concern, with too many axes and too much sensitivity for extreme sports and the like; however, I saw that you can get a decent, low sensitivity two-axis gyroscope for a good price. This may be the key to acquiring information from the Arduino. It may even need lowered sensitivity yet, but it only really needs to perceive the angle at which the person is facing. The extra axis can perhaps be used to better connect the camera to the user.

The Math: So we have two basic factors we need to keep in mind here: distance from the user, and the angle the user is facing. Sounds like a job for Polar Coordinates! The reason I mention Polar Coordinates is that as the user rotates, we want the drone to 1) change degrees to face the camera at the user, and 2) physically move behind the user in an arc. The change in degree is simple enough. If we imagine the gyroscope as facing the drone, then we want the drone to maintain a -180 degree relationship to it. Perhaps before this adjustment is made, the drone can take this change in degree to calculate the arc to form, and then perform them simultaneously!
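Here is a quick Python sketch of that polar-coordinate idea. The function names are made up, and it assumes a flat 2D plane with the user at a known position and a back-mounted sensor whose heading points from the user toward the drone. None of this is settled yet, it's just the geometry written down.

```python
import math


def wrap_degrees(angle):
    """Wrap an angle into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def drone_target(user_x, user_y, sensor_heading_deg, distance):
    """Where the drone should sit, and which way it should face.

    The sensor on the user's back points toward the drone, so the drone
    sits `distance` away along that heading and faces the opposite way.
    """
    theta = math.radians(sensor_heading_deg)
    x = user_x + distance * math.cos(theta)
    y = user_y + distance * math.sin(theta)
    yaw = wrap_degrees(sensor_heading_deg - 180.0)
    return x, y, yaw


def arc_length(old_heading_deg, new_heading_deg, distance):
    """Length of the arc the drone flies when the user rotates."""
    change = wrap_degrees(new_heading_deg - old_heading_deg)
    return distance * abs(math.radians(change))


if __name__ == "__main__":
    # User at the origin, drone kept 3 m away.
    print(drone_target(0.0, 0.0, 0.0, 3.0))
    # User turns a quarter of the way around: the drone follows the arc.
    print(drone_target(0.0, 0.0, 90.0, 3.0))
    print(arc_length(0.0, 90.0, 3.0))
```

The arc length is just radius times the change in angle (in radians), so the further back the drone follows, the more ground it has to cover for the same head turn.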

However, how to track movement has not yet been decided. It doesn't necessarily have to be true movement, in that it's constantly monitored and applied for us, but rather relative movement, similarly to how the gyrometer works: The Arduino just needs to know the amount of change, so it may be applied to the drone.

Possible Expansions: I shouldn't be getting this far ahead of myself, but when you're excited it's inevitable. It's what keeps projects interesting, and helps you separate yourself from the rest of the pack. So, imagine this. Imagine if we applied all of the rules of the first Arduino to a second, head-mounted Arduino that affected the camera's movement: your eye in the sky. You're riding your bike, the drone is following. You tilt your head because you swear you spotted a wild sasquatch. The drone, maintaining its following pace, tilts the camera to face the same direction, capturing 720p footage of the sasquatch and making you famous. I've got it all figured out. But really, this could be quite a useful feature! Additionally, if you were mathematical about the velocity, acceleration and time, you could have the camera only angle itself at the same theoretical time that you did, so almost like a transformation of you riding your bike, to a drone in the sky.

Understood limitations: It dawned on me right now that since this is ROS based I need to somehow have a laptop available during testing. I will be considering ways around this once I get to the point where everything is communicating properly (hint: it may involve a Raspberry Pi and a router).

Stay tuned for more updates!

------------------------------------------------------------------------------------------------------------


Thursday, August 28, 2014

Drone Following #3: Collaboration and Ideas

Haven't done much flying since the last post but quite a bit of logistical thinking-- both alone and with others. I have two friends interested in working on this project with me-- my girlfriend, Nicole Lay, a Computer Science graduate, and my friend, Aaron Bradshaw, an engineering student at University of Kentucky. You may have noticed the yet unfulfilled "AJ Labs" link over at leibeck.tk, and that's what we intend to put there. It's really touch and go while we figure out how we want to share our information, so for the time being just know they are contributing from close or afar.


In terms of the project, I've tried to make it into a basic logistics problem. What kind of information are we receiving, what information can we derive from this existing information, and how can we use this connectivity to achieve our goal. So let's do it this way:

The Problem: We want to be able to have the drone follow targets during a variety of activities.

Current Thoughts: Connecting the drone to another "theoretical" device in order to orient the drone towards the device, and to follow it.

Available Information: On the ardrone_autonomy github page, you can see a concise list of the accessible information:

- header: ROS message header
- batteryPercent: The remaining charge of the drone's battery (%)
- state: The Drone's current state:
  - 0: Unknown
  - 1: Inited
  - 2: Landed
  - 3,7: Flying
  - 4: Hovering
  - 5: Test (?)
  - 6: Taking off
  - 8: Landing
  - 9: Looping (?)
- rotX: Left/right tilt in degrees (rotation about the X axis)
- rotY: Forward/backward tilt in degrees (rotation about the Y axis)
- rotZ: Orientation in degrees (rotation about the Z axis)
- magX, magY, magZ: Magnetometer readings (AR-Drone 2.0 Only) (TBA: Convention)
- pressure: Pressure sensed by Drone's barometer (AR-Drone 2.0 Only) (Pa)
- temp: Temperature sensed by Drone's sensor (AR-Drone 2.0 Only) (TBA: Unit)
- wind_speed: Estimated wind speed (AR-Drone 2.0 Only) (TBA: Unit)
- wind_angle: Estimated wind angle (AR-Drone 2.0 Only) (TBA: Unit)
- wind_comp_angle: Estimated wind angle compensation (AR-Drone 2.0 Only) (TBA: Unit)
- altd: Estimated altitude (mm)
- motor1..4: Motor PWM values
- vx, vy, vz: Linear velocity (mm/s) [TBA: Convention]
- ax, ay, az: Linear acceleration (g) [TBA: Convention]
- tm: Timestamp of the data returned by the Drone, as the number of microseconds passed since the Drone's boot-up.

Derivation and Communication: Of particular interest for my idea is the rotZ parameter. If our "theoretical device" could have a similar parameter, but with certainty, we could line up the two devices on a similar plane, and have the drone maintain a 180 degree relationship, facing the device at all times. And as long as the device maintained the ability to be moved relatively, this transformation could be applied to the drone. As you can see, most of this relies on communication and transformation from the external device.
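To sketch what "maintaining a 180 degree relationship" might look like in practice, here is a bit of Python. It assumes both headings are in degrees in the same reference frame, which is a big assumption given that the device doesn't exist yet. The gain `KP`, the function names, and the sign of the resulting command are all hypothetical.

```python
KP = 0.02  # proportional gain: command per degree of error (assumed)


def yaw_error(device_heading_deg, drone_rotz_deg):
    """Smallest signed rotation (degrees) that puts the drone 180 degrees
    from the device heading. The result lies in [-180, 180)."""
    desired = device_heading_deg + 180.0
    return (desired - drone_rotz_deg + 180.0) % 360.0 - 180.0


def yaw_command(device_heading_deg, drone_rotz_deg):
    """Proportional turn command, limited to the range [-1, 1]."""
    command = KP * yaw_error(device_heading_deg, drone_rotz_deg)
    return max(-1.0, min(1.0, command))


if __name__ == "__main__":
    # Already facing the device: no correction needed.
    print(yaw_error(0.0, 180.0))
    # Drone is 10 degrees short of the 180 degree relationship.
    print(yaw_error(0.0, 170.0), yaw_command(0.0, 170.0))
    # Wrap-around case: the short way round is -10 degrees, not +350.
    print(yaw_error(170.0, 0.0))
```

The modulo arithmetic is there so the drone always takes the short way around instead of spinning nearly a full circle when the headings cross the +/-180 boundary.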

The Device: We're also putting a lot of emphasis on what the particular device could be. For the sake of my upcoming project for my electronics course about microcontrollers, I may consider using an Arduino with a GPS shield, if that is indeed a possibility. It could expand to mobile devices like phones and tablets, but getting an app and setting it up to communicate with ROS could be even more difficult. We'll see, I suppose. I still need to try my luck at controlling the drone with ROS.

A side note: It would seem intuitive to purchase a GPS flight recorder for the drone, and maybe give it a better idea of where it is relative to its surroundings, but I feel like this would be "buying" my way out of the situation. I understand that we're talking about a drone and another device, likely bringing the cost up past $300-400, but it's really important to me as an aspiring Engineer to make the most of what's available, not only for the challenge, but to make the end result available to the largest group of users. In truth there are already systems that extreme sports athletes use, but I would like to expand this type of system to this cheaper platform. That's what it's all about!

We'll see where this idea takes us. I may not update for a while, but I'll be sure to include my flight videos when I can.

------------------------------------------------------------------------------------------------------------

Wednesday, August 27, 2014

Drone Following #2: Learning to Fly

This isn't a terribly programming-heavy post, but it is essential to working with and understanding something that flies-- especially with four rotors. I took my Drone out for its first flight, which was mostly successful. I didn't understand that there were actually quite a few settings to configure before flying, but even under less than optimal circumstances it performed quite well.

The battery life of these devices really isn't that developed yet, but because this is the POWER EDITION, it has high density batteries that boost it close to an hour. In terms of the settings, there are a few key ones that affect how you'll be flying the drone. Most important is the maximum tilt. This value determines what the acceptable amount of tilt is for the drone. However, this also affects your maximum speed, since, in order to stop, the device may have to tilt past the acceptable limit. Also important is the maximum rotation, which has essentially the same properties, but for rotational movements like turning. These two settings combined essentially form a kind of overall sensitivity. It's pretty difficult to crash and burn when both the tilt and rotation are turned down, so keep that in mind. If you're filming or trying to get shots with the onboard camera, I'd suggest this kind of setting, but I imagine with an external camera (attached to the USB port) like the GoPro, it's probably not too much of a problem.

There's also a set of lighter features that are good for optimizing your style of flight. Drones can be flown indoors and outdoors, so there are switches for things like where you're flying and which of the styrofoam hulls you're using, plus a few more gauges like maximum altitude (up to 100 feet), max vertical speed (how fast the drone rises and descends), and a few others. Before I begin experimenting I want to fly quite a bit to better understand what behaviors the drone can tolerate, and what settings would best permit these behaviors. The app requires you to open a menu (while flying D: ), whereas on ROS you could probably easily manipulate these settings on the fly.

On top of that, there are a few novelties such as flips (can be enabled over 30% battery) and "absolute control" (where it spins around and then you can move it relative to where you are), but I've not discovered any pleasing features.

I hope to log a decent number of hours flying, and, more importantly, safely landing before I proceed to use the driver. I'll try to make some of my videos and pictures available so you can maybe determine if you'd like one in the future.

The battery life of these devices really isn't that developed yet, but because this is the POWER EDITION, it has high density batteries that boost it close to an hour. In terms of the settings, there's a few key ones to how you'll be flying the drone. Most important is the maximum tilt. This value determines what the acceptable amount of tilt is for the drone. However, this also affects your maximum speed, since, in order to stop, the device may have to tilt past the acceptable limit. Also important is the maximum rotation, which has essentially the same properties, but for rotational properties like turning. These two facts combined essentially form kind of an overall sensitivity. It's pretty difficult to crash and burn when both the tilt and rotation are turned down, so keep that in mind. If you're filming or trying to get shots with the onboard camera, I'd suggest this kind of setting, but I imagine with an external camera (attached to the USB port) like the GoPro, it's probably not too much of a problem.

Drone Following #1: Features and Prospects

I acquired an AR Drone today for a good price.

I'm beginning to brainstorm what kind of projects to take on. I have an upcoming microcontroller project in an electronics class that I may now use it for. The most interesting aspect to me, and really the selling point, is the pair of horizontal and vertical cameras, with the horizontal one capable of filming at up to 720p. I've already played around with the AR.FreeFlight app on both iOS and Android, and I was surprised by the options available for the camera.

One of the aspects I really want to check out is ROS connectivity. A few people in my lab have used these same Parrot drones. I took a look at ar_driver, and it appears to be roughly equivalent to a manual version of the AR.FreeFlight app, which is convenient. There's also ardrone_autonomy, which I'll move to once I understand the driver.

The first project I want to take a look at, though, is classic teleoperation. I like the free app, and it's great for getting started, but I have my own gripes with it. First I want to figure out how to use the drone with my computer, and then use some joysticks and other controllers. I have in my possession an Xbox 360 controller and several Wiimotes and Nunchuks. I've seen a few interesting Wiimote teleoperation setups that I would also like to investigate.
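As a rough idea of what the teleoperation glue might look like, here's a minimal sketch that turns two analog sticks into a velocity command. It's plain Python so it runs without ROS installed; the dictionary keys simply mirror the fields of a ROS Twist message. The function names, the stick-to-motion assignment, the dead zone and the 0.5 scale are all my own assumptions, and real controllers often report axes with different signs, so expect to flip a few.

```python
def apply_deadzone(value, deadzone=0.1):
    """Zero out small stick noise and rescale the rest back to the -1..1 range."""
    if abs(value) < deadzone:
        return 0.0
    sign = 1.0 if value > 0 else -1.0
    return sign * (abs(value) - deadzone) / (1.0 - deadzone)


def axes_to_command(left_x, left_y, right_x, right_y, scale=0.5):
    """Map two analog sticks (each axis in -1..1) to a velocity command.

    Assumed layout: right stick moves the drone horizontally,
    left stick handles altitude and yaw.
    """
    return {
        "linear_x": scale * apply_deadzone(right_y),   # forward / backward
        "linear_y": scale * apply_deadzone(right_x),   # left / right
        "linear_z": scale * apply_deadzone(left_y),    # up / down
        "angular_z": scale * apply_deadzone(left_x),   # yaw
    }


if __name__ == "__main__":
    # Right stick pushed fully forward, everything else centered.
    print(axes_to_command(0.0, 0.0, 0.0, 1.0))
```

The dead zone is there because sticks rarely rest at exactly zero, and a drone that drifts whenever you let go of the controller is no fun.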

Next, I want to work on some automated following. I imagine this can be done in one of two ways: either by human recognition or by tracking the drone's position relative to some mobile device or controller. I think the latter is clearly easier, so I'll likely be working on it first. However, I certainly want to get human recognition working at some point, since that would open up a whole new range of projects.
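For the device-relative approach, the simplest thing I can picture is a proportional controller: turn toward the target and move forward or backward to hold a set distance. The sketch below assumes some hypothetical tracker already provides the target's position relative to the drone, which is the hard part and not shown here. The gains, the 2 m following distance and all the names are placeholders, not anything I've tested on the drone.

```python
import math


def clamp(value, low=-1.0, high=1.0):
    """Keep a command inside the allowed range."""
    return max(low, min(high, value))


def follow_command(dx, dy, keep_distance=2.0, k_forward=0.5, k_yaw=1.0):
    """One proportional follow step.

    dx, dy: target position in metres relative to the drone
            (x forward, y to the left), from a hypothetical tracker.
    Returns (forward, yaw) commands, each clamped to -1..1.
    """
    distance = math.hypot(dx, dy)
    bearing = math.atan2(dy, dx)  # 0 when the target is dead ahead
    forward = clamp(k_forward * (distance - keep_distance))
    yaw = clamp(k_yaw * bearing)
    return forward, yaw


if __name__ == "__main__":
    # Target 4 m ahead and 1 m to the left of the drone.
    print(follow_command(4.0, 1.0))
```

Clamping matters here: a target that suddenly appears far away shouldn't translate into a full-throttle lunge.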

------------------------------------------------------------------------------------------------------------

Has anyone made any cool mods to a drone?
