I seem to have a lot of ideas whenever I'm biking. Perhaps it's because biking puts me right where I would be when the project finally comes together. That, and I really enjoy pretending I'm on a lightcycle.

I've been thinking more and more about which device to use. The device we choose will be important, because it needs to guide the drone. The drone will essentially have no native information about its orientation, and we want to supply that from the human being via a device. I believe I've settled on using an Arduino for the time being. They happen to be something I have in abundance, and I have an upcoming project for one in an electronics class.

However, an Arduino alone won't do it. I was thinking about what kind of shield would be most appropriate for the situation. I thought a little bit about maybe a

GPS shield, but for the time being I think that's a bit overkill. I was thinking actually of using a

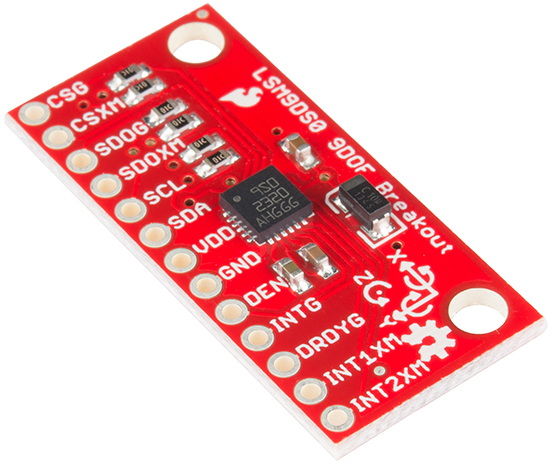

gyrometer shield. The idea of wearables excites me these day, so I was considering having the target wear the the arduino on his/her back, and having the drone figure its angle from the information on the Arduino. I was worried that this might be concern, with too many axes and too much sensitivity for extreme sports and the like; however, I saw that you can get a

decent, low sensitivity two-axis gyroscope for a good price. This may be the key to acquiring information from the arduino. It may even need lowered sensitivity yet, but it only really needs to perceive the angle at which the person is facing. The extra axis can perhaps be used to better to connect the camera to the user.
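One detail worth spelling out: a gyroscope reports how fast it is rotating, not which way it points, so the facing angle has to be built up by integrating those rates over time. Here's a minimal sketch of that idea, written in Python for readability (the Arduino itself would run C++). It assumes the yaw axis reports degrees per second at a fixed sample interval; `integrate_heading` and `wrap_degrees` are hypothetical helpers, not part of any shield's library.

```python
from typing import Sequence

# Hypothetical sketch: build a facing angle (heading) from gyroscope rate samples.
# Assumes the yaw axis reports angular rate in degrees per second and that
# samples arrive every `dt` seconds. No drift correction is done here.


def wrap_degrees(angle: float) -> float:
    """Wrap any angle into the range [0, 360)."""
    return angle % 360.0


def integrate_heading(start_heading: float, yaw_rates: Sequence[float], dt: float) -> float:
    """Integrate yaw-rate samples (deg/s), one every dt seconds, into a heading (deg)."""
    heading = start_heading
    for rate in yaw_rates:
        # Rectangle-rule integration: change in angle = rate * elapsed time.
        heading += rate * dt
    return wrap_degrees(heading)


if __name__ == "__main__":
    # The rider turns at a steady 45 deg/s for one second, sampled at 100 Hz.
    print(integrate_heading(0.0, [45.0] * 100, 0.01))
```

Because each sample's small error gets added in, a heading integrated this way drifts slowly, so in practice it would need an occasional correction from some other reference.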

The Math: So we have two basic factors we need to keep in mind here: the distance from the user, and the angle the user is facing. Sounds like a job for Polar Coordinates! The reason I mention Polar Coordinates is that as the user rotates, we want the drone to 1) change its heading so the camera stays pointed at the user, and 2) physically move behind the user in an arc. The change in heading is simple enough. If we imagine the gyroscope as facing the drone, then we want the drone to maintain a 180-degree relationship to it. Perhaps before this adjustment is made, the drone can use the change in degrees to calculate the arc to fly, and then perform both simultaneously!
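The polar-coordinate idea could be sketched like this: treat the user as the origin, place the drone at radius `distance` and polar angle "user heading + 180 degrees", and step that angle around the circle when the user turns. Each waypoint carries both a position and a yaw, so the move along the arc and the camera turn happen together. This is a hypothetical Python sketch, not an existing drone or ROS API; it assumes headings are in degrees measured counterclockwise from the x-axis (not compass bearings), and `follow_pose` and `arc_waypoints` are illustrative names.

```python
import math
from typing import List, Tuple

# Hypothetical sketch of the polar-coordinate follow logic.
# Assumptions: headings are in degrees, measured counterclockwise from the
# x-axis; the user is the origin of the polar coordinates; distances in metres.

Pose = Tuple[float, float, float]  # (x, y, yaw in degrees)


def shortest_turn(from_deg: float, to_deg: float) -> float:
    """Signed smallest rotation, in [-180, 180), that takes from_deg to to_deg."""
    return (to_deg - from_deg + 180.0) % 360.0 - 180.0


def follow_pose(user_x: float, user_y: float, user_heading: float, distance: float) -> Pose:
    """Place the drone `distance` behind the user with the camera aimed at the user."""
    # Polar angle of the drone around the user: directly opposite the user's heading.
    behind = math.radians(user_heading + 180.0)
    x = user_x + distance * math.cos(behind)
    y = user_y + distance * math.sin(behind)
    # Looking at the user's back means facing the same way the user faces,
    # i.e. 180 degrees away from the direction pointing from the user to the drone.
    yaw = user_heading % 360.0
    return (x, y, yaw)


def arc_waypoints(user_x: float, user_y: float, old_heading: float,
                  new_heading: float, distance: float, steps: int) -> List[Pose]:
    """Split a user turn into `steps` poses along the circle of radius `distance`."""
    turn = shortest_turn(old_heading, new_heading)
    return [
        follow_pose(user_x, user_y, old_heading + turn * i / steps, distance)
        for i in range(1, steps + 1)
    ]


if __name__ == "__main__":
    # User at the origin turns from facing +x (0 deg) to facing +y (90 deg).
    for pose in arc_waypoints(0.0, 0.0, 0.0, 90.0, 5.0, 3):
        print(tuple(round(v, 2) for v in pose))
```

Using the shortest signed turn keeps the drone from swinging the long way around when the user's heading wraps past 0/360 degrees.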

However, I haven't yet decided how to track movement. It doesn't necessarily have to be true (absolute) movement that is constantly monitored and applied for us; relative movement would do, similar to how the gyroscope works: the Arduino just needs to know the amount of change so that it can be applied to the drone.
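Relative tracking could be as simple as forwarding displacements: whenever the user's device reports how far the user moved since its last report, the drone shifts its own target by the same amount, so the offset between them is preserved. A minimal sketch, assuming the device can produce such `(dx, dy)` deltas in metres (how it would measure them is still the open question); `Setpoint`, `apply_relative_move` and `follow_deltas` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

# Hypothetical sketch of relative (delta-based) following.
# Assumption: the user's device reports displacement since its last report
# as (dx, dy) in metres; how it measures that is still undecided.


@dataclass(frozen=True)
class Setpoint:
    x: float
    y: float


def apply_relative_move(drone: Setpoint, dx: float, dy: float) -> Setpoint:
    """Shift the drone's target by the same displacement the user reported."""
    return Setpoint(drone.x + dx, drone.y + dy)


def follow_deltas(start: Setpoint, deltas: Iterable[Tuple[float, float]]) -> Setpoint:
    """Apply each reported user displacement, in order, to the drone's target."""
    drone = start
    for dx, dy in deltas:
        drone = apply_relative_move(drone, dx, dy)
    return drone


if __name__ == "__main__":
    # Drone starts 5 m behind a user standing at the origin; the user then moves twice.
    print(follow_deltas(Setpoint(-5.0, 0.0), [(1.0, 0.0), (2.0, 0.5)]))
```

As with the gyroscope, small errors in each delta accumulate, so relative tracking trades simplicity for slow drift.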

Possible Expansions: I shouldn't be getting this far ahead of myself, but when you're excited it's inevitable. It's what keeps projects interesting, and it helps you separate yourself from the rest of the pack. So, imagine this: we apply all of the rules of the first Arduino to a second, head-mounted Arduino that controls the camera's movement, your eye in the sky. You're riding your bike, and the drone is following. You tilt your head because you swear you spotted a wild sasquatch. The drone, maintaining its following pace, tilts the camera to face the same direction, capturing 720p footage of the sasquatch and making you famous. I've got it all figured out. But really, this could be quite a useful feature! Additionally, if you were mathematical about the velocity, acceleration and time, you could have the camera angle itself over the same theoretical time that you did, almost like a transformation of you riding your bike into a drone in the sky.
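The "same theoretical time" idea could look something like this: measure how long the rider's head took to tilt, then have the gimbal cover the same angle over the same duration. This sketch swaps in a smoothstep profile (3f^2 - 2f^3), which starts and ends at zero angular velocity, as one simple way to account for acceleration. The gimbal limits of -90 to 30 degrees and the names `smoothstep` and `gimbal_pitch_at` are assumptions for illustration only.

```python
# Hypothetical sketch: make the gimbal repeat a head tilt over the same duration.
# Assumptions: pitch angles in degrees (negative = looking down), the head's
# move duration has been measured, and the gimbal is limited to [-90, 30] degrees.


def smoothstep(f: float) -> float:
    """Ease-in/ease-out curve from 0 to 1 with zero slope at f = 0 and f = 1."""
    return 3.0 * f * f - 2.0 * f * f * f


def gimbal_pitch_at(t: float, start_deg: float, target_deg: float, duration_s: float,
                    min_deg: float = -90.0, max_deg: float = 30.0) -> float:
    """Camera pitch t seconds into a move the rider's head made in duration_s seconds."""
    if duration_s <= 0.0:
        fraction = 1.0  # an instantaneous move: jump straight to the target
    else:
        fraction = min(max(t / duration_s, 0.0), 1.0)  # progress clamped to [0, 1]
    angle = start_deg + (target_deg - start_deg) * smoothstep(fraction)
    # Never command the gimbal past its mechanical limits.
    return min(max(angle, min_deg), max_deg)


if __name__ == "__main__":
    # Head tilted from level to 40 degrees down in 0.8 s; sample the gimbal at 50 Hz.
    commands = [gimbal_pitch_at(k * 0.02, 0.0, -40.0, 0.8) for k in range(41)]
    print(commands[0], commands[20], commands[40])
```

Sampling the function at a fixed rate, as in the usage above, gives the stream of pitch commands; a plain linear profile would also work but would start and stop the camera abruptly.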

Understood limitations: It just dawned on me that since this is ROS-based, I need to somehow have a laptop available during testing. I will consider ways around this once everything is communicating properly (hint: it may involve a Raspberry Pi and a router).

Stay tuned for more updates!
